This is the fourth and final post in a multi-part series suggesting an ALM process for projects where Microsoft Dynamics CRM is used as a data store.

In my previous blog post, I explained how to include Microsoft Dynamics CRM customisations in your Team Build, how to structure CRM customisations and scripts in TFS, and how to produce a deployable managed and/or unmanaged solution as an output. In this post, I will describe a deployment process that deploys the package produced by Team Build to a target environment.

Deployment Overview

The importance of having a reliable, repeatable and well-documented deployment process cannot be overstated. Deployment should be planned from the very outset of the project, starting with a single-machine environment, scaling up to test and staging environments and eventually to production. A repeatable process prevents surprises during the all-important go-live, and it also allows you to make regular, continuous deliveries.

In this scenario, we are considering deploying a new CRM solution to a new CRM target environment; that is to say, we are not upgrading an existing system or deploying to an existing CRM instance. The deployment involves the following steps:

1. Create a new CRM organisation.
2. Set CRM organisation settings such as currency, time zone, etc.
3. Import data maps required before importing the CRM solution.
4. Import data required before importing the CRM solution.
5. Import the CRM solution.
6. Import data maps for initial data population.
7. Import data for initial data population.
8. Publish SSRS reports.
9. Import team associations.
10. Publish workflows.

Apart from steps 1 and 5 (creating the organisation and importing the solution), all the steps are optional and apply only if your CRM customisations require them.
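One way to keep this sequence repeatable is to give each step its own MSBuild target and chain them from a single entry point. The sketch below is only a skeleton under stated assumptions: the target names and the `Run*` switch properties are hypothetical, and each target just logs a message where the real CRM task would go. Creating the organisation and importing the solution always run; the optional steps are guarded by a `Condition`, so a target whose condition is false is simply skipped.

```xml
<Project ToolsVersion="4.0" DefaultTargets="Deploy" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <PropertyGroup>
    <!-- Hypothetical switches for the optional steps; override on the command line, e.g. /p:RunReports=true -->
    <RunSettings Condition="'$(RunSettings)' == ''">true</RunSettings>
    <RunPreSolutionImport Condition="'$(RunPreSolutionImport)' == ''">false</RunPreSolutionImport>
    <RunInitialDataImport Condition="'$(RunInitialDataImport)' == ''">true</RunInitialDataImport>
    <RunReports Condition="'$(RunReports)' == ''">false</RunReports>
    <RunTeamAssociations Condition="'$(RunTeamAssociations)' == ''">false</RunTeamAssociations>
    <RunWorkflows Condition="'$(RunWorkflows)' == ''">false</RunWorkflows>
  </PropertyGroup>

  <!-- Mandatory step: no Condition -->
  <Target Name="CreateOrganisation">
    <Message Importance="high" Text="Creating the CRM organisation" />
  </Target>
  <Target Name="UpdateOrganisationSettings" Condition="'$(RunSettings)' == 'true'">
    <Message Importance="high" Text="Setting organisation settings" />
  </Target>
  <Target Name="ImportPreSolutionData" Condition="'$(RunPreSolutionImport)' == 'true'">
    <Message Importance="high" Text="Importing data maps and data required by the solution" />
  </Target>
  <!-- Mandatory step: no Condition -->
  <Target Name="ImportSolution">
    <Message Importance="high" Text="Importing the CRM solution" />
  </Target>
  <Target Name="ImportInitialData" Condition="'$(RunInitialDataImport)' == 'true'">
    <Message Importance="high" Text="Importing data maps and initial data" />
  </Target>
  <Target Name="PublishReports" Condition="'$(RunReports)' == 'true'">
    <Message Importance="high" Text="Publishing SSRS reports" />
  </Target>
  <Target Name="ImportTeamAssociations" Condition="'$(RunTeamAssociations)' == 'true'">
    <Message Importance="high" Text="Importing team associations" />
  </Target>
  <Target Name="PublishWorkflows" Condition="'$(RunWorkflows)' == 'true'">
    <Message Importance="high" Text="Publishing workflows" />
  </Target>

  <!-- Entry point: DependsOnTargets runs the steps in the order listed -->
  <Target Name="Deploy" DependsOnTargets="CreateOrganisation;UpdateOrganisationSettings;ImportPreSolutionData;ImportSolution;ImportInitialData;PublishReports;ImportTeamAssociations;PublishWorkflows" />
</Project>
```

Keeping the switches as properties means the same script can be promoted unchanged from a single-machine environment to test, staging and production, with only the command-line values differing.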

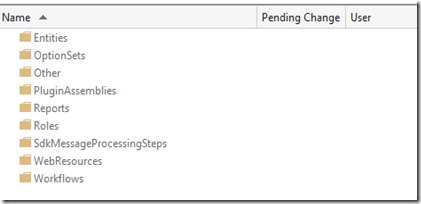

In my last post, I suggested structuring the CRM deployable in the following format, and we will use the same structure when writing our deployment scripts.

![Sample CRM Folder Structure](http://lh6.ggpht.com/-9yFFneWnqc0/UyZVnb1qRkI/AAAAAAAAExg/2B-fWZEkLzA/s1600/Sample%252520CRM%252520Folder%252520Structure%25255B2%25255D.jpg)
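Because the deployment scripts rely on this structure, it can help to describe it once as MSBuild items rather than hard-coding paths in every task. The sketch below assumes hypothetical sub-folder names (`Solutions`, `DataMaps`, `Data`, `Reports`) and a hypothetical `DeploymentRoot` property that defaults to the folder containing the script; adjust both to match your own deployable. It only lists what it finds and fails early if no solution package is present.

```xml
<Project ToolsVersion="4.0" DefaultTargets="ListDeployable" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <PropertyGroup>
    <!-- Hypothetical root of the Team Build drop; defaults to this script's folder -->
    <DeploymentRoot Condition="'$(DeploymentRoot)' == ''">$(MSBuildThisFileDirectory)</DeploymentRoot>
    <!-- Normalise so the wildcards below can append sub-folder names directly -->
    <DeploymentRoot Condition="!HasTrailingSlash('$(DeploymentRoot)')">$(DeploymentRoot)\</DeploymentRoot>
  </PropertyGroup>
  <ItemGroup>
    <!-- Hypothetical sub-folder names; match them to your own structure -->
    <CrmSolutionFile Include="$(DeploymentRoot)Solutions\*.zip" />
    <CrmDataMapFile Include="$(DeploymentRoot)DataMaps\*.xml" />
    <CrmDataFile Include="$(DeploymentRoot)Data\*.csv" />
    <CrmReportFile Include="$(DeploymentRoot)Reports\*.rdl" />
  </ItemGroup>
  <Target Name="ListDeployable">
    <!-- Fail early if the drop does not contain a solution package -->
    <Error Condition="'@(CrmSolutionFile)' == ''" Text="No solution .zip found under $(DeploymentRoot)Solutions" />
    <!-- %(...) batches each Message once per item found -->
    <Message Importance="high" Text="Solution: %(CrmSolutionFile.Filename)" />
    <Message Importance="high" Text="Data map: %(CrmDataMapFile.Filename)" />
    <Message Importance="high" Text="Data file: %(CrmDataFile.Filename)" />
    <Message Importance="high" Text="Report: %(CrmReportFile.Filename)" />
  </Target>
</Project>
```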

I will write my deployment scripts in MSBuild, using a library called the MSBuild Extension Pack. The library provides a rich set of functionality, and its March release also includes tasks for Microsoft Dynamics CRM.
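Before any `MSBuild.ExtensionPack.*` element can be used, the project has to import the Extension Pack's tasks file so that MSBuild knows where the tasks live. The sketch below assumes the default install location of the 4.0 tasks file, `$(MSBuildExtensionsPath)\ExtensionPack\4.0\MSBuild.ExtensionPack.tasks`, and assumes that this file registers the CRM tasks in the release you install; please check the Extension Pack documentation for your version. The hypothetical `ExtensionPackTasksPath` property lets the path be overridden, and the target fails with a clear message if the file is missing.

```xml
<Project ToolsVersion="4.0" DefaultTargets="CheckExtensionPack" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <PropertyGroup>
    <!-- Assumed default location; override with /p:ExtensionPackTasksPath=... if installed elsewhere -->
    <ExtensionPackTasksPath Condition="'$(ExtensionPackTasksPath)' == ''">$(MSBuildExtensionsPath)\ExtensionPack\4.0\MSBuild.ExtensionPack.tasks</ExtensionPackTasksPath>
  </PropertyGroup>

  <!-- Pulls in the Extension Pack task registrations; guarded so a missing file is reported by the target below -->
  <Import Project="$(ExtensionPackTasksPath)" Condition="Exists('$(ExtensionPackTasksPath)')" />

  <Target Name="CheckExtensionPack">
    <Error Condition="!Exists('$(ExtensionPackTasksPath)')" Text="MSBuild Extension Pack tasks file not found at $(ExtensionPackTasksPath)" />
    <Message Importance="high" Text="Using MSBuild Extension Pack tasks from $(ExtensionPackTasksPath)" />
  </Target>
</Project>
```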

Sample Deployment

The following is a sample listing of the deployment process described above. For simplicity, I have included only steps 1, 2, 5, 6 and 7.

<Target Name="DeployCrmOrganisation64">
  <!-- Create the CRM organisation -->
  <MSBuild.ExtensionPack.Crm.Organization TaskAction="Create" DeploymentUrl="http://CRMServer/XRMDeployment/2011/Deployment.svc" Name="organization1" DisplayName="Organization 1" SqlServerInstance="MySqlServer" SsrsUrl="http://reports1/ReportServer" Timeout="20" />
  <!-- Update the organisation's settings -->
  <ItemGroup>
    <Settings Include="pricingdecimalprecision">
      <Value>2</Value>
    </Settings>
    <Settings Include="localeid">
      <Value>2057</Value>
    </Settings>
    <Settings Include="isauditenabled">
      <Value>false</Value>
    </Settings>
  </ItemGroup>
  <MSBuild.ExtensionPack.Crm.Organization TaskAction="UpdateSetting" OrganizationUrl="http://CRMServer/organization1" Settings="@(Settings)" />
  <!-- Import the solution -->
  <MSBuild.ExtensionPack.Crm.Solution TaskAction="Import" OrganizationUrl="http://CRMServer/organization1" Name="CrmSolution" Path="C:\Solutions" Extension="zip" OverwriteCustomizations="true" EnableSDKProcessingSteps="True" />
  <!-- Import the data map -->
  <MSBuild.ExtensionPack.Crm.DataMap TaskAction="Import" OrganizationUrl="http://CRMServer/organization1" Name="Organization1" FilePath="C:\DataMapFile1" />
  <!-- Import the data -->
  <MSBuild.ExtensionPack.Crm.Data TaskAction="Import" OrganizationUrl="http://CRMServer/organization1" DataMapName="Entity1DataMap" SourceEntityName="entity1" TargetEntityName="entity1" FilePath="C:\DataFile1.csv" />
</Target>

The first step in the script creates a new CRM organisation. The task used is “MSBuild.ExtensionPack.Crm.Organization” with a task action of “Create”. It takes as parameters the CRM instance’s deployment URL, the name and display name of the organisation, the SQL Server instance and the SSRS URL. The Timeout parameter is optional; I specify it to prevent the deployment script from waiting indefinitely.
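To scale the same script from a development machine up to test, staging and production, the server names can be lifted out of the task into properties chosen per environment. In the sketch below, the `TargetEnvironment`, `CrmServer`, `SqlServerInstance`, `SsrsUrl` and `OrganisationName` properties and the test server names are hypothetical; the task and its attributes are exactly those used in the sample above, and the Import path is the assumed default Extension Pack location.

```xml
<Project ToolsVersion="4.0" DefaultTargets="CreateOrganisation" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <!-- Assumed default install location of the Extension Pack tasks -->
  <Import Project="$(MSBuildExtensionsPath)\ExtensionPack\4.0\MSBuild.ExtensionPack.tasks" />

  <!-- Hypothetical per-environment values; select with /p:TargetEnvironment=Test -->
  <PropertyGroup Condition="'$(TargetEnvironment)' == '' Or '$(TargetEnvironment)' == 'Dev'">
    <CrmServer>CRMServer</CrmServer>
    <SqlServerInstance>MySqlServer</SqlServerInstance>
    <SsrsUrl>http://reports1/ReportServer</SsrsUrl>
  </PropertyGroup>
  <PropertyGroup Condition="'$(TargetEnvironment)' == 'Test'">
    <CrmServer>TestCrmServer</CrmServer>
    <SqlServerInstance>TestSqlServer</SqlServerInstance>
    <SsrsUrl>http://testreports/ReportServer</SsrsUrl>
  </PropertyGroup>
  <PropertyGroup>
    <OrganisationName Condition="'$(OrganisationName)' == ''">organization1</OrganisationName>
  </PropertyGroup>

  <Target Name="CreateOrganisation">
    <!-- Same task and attributes as the sample; only the values now come from properties -->
    <MSBuild.ExtensionPack.Crm.Organization TaskAction="Create" DeploymentUrl="http://$(CrmServer)/XRMDeployment/2011/Deployment.svc" Name="$(OrganisationName)" DisplayName="Organization 1" SqlServerInstance="$(SqlServerInstance)" SsrsUrl="$(SsrsUrl)" Timeout="20" />
  </Target>
</Project>
```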

Once the organisation is created, the next step is to set certain organisation settings. Again, the task “MSBuild.ExtensionPack.Crm.Organization”, this time with the task action “UpdateSetting”, allows this. The task takes an ItemGroup of setting names and values as a parameter.
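In that ItemGroup, each item's `Include` is the organisation setting name and its `Value` metadata is the value to apply. The sketch below reuses the settings from the sample (2057 is the locale ID for English, United Kingdom) and adds a `Message` that uses MSBuild task batching (`%(Settings.Identity)`, `%(Settings.Value)`) to log each name/value pair before the settings are applied, which makes deployment logs easier to audit. The `OrganizationUrl` property is a hypothetical convenience and the Import path is the assumed default Extension Pack location.

```xml
<Project ToolsVersion="4.0" DefaultTargets="UpdateSettings" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <!-- Assumed default install location of the Extension Pack tasks -->
  <Import Project="$(MSBuildExtensionsPath)\ExtensionPack\4.0\MSBuild.ExtensionPack.tasks" />
  <PropertyGroup>
    <OrganizationUrl Condition="'$(OrganizationUrl)' == ''">http://CRMServer/organization1</OrganizationUrl>
  </PropertyGroup>
  <ItemGroup>
    <!-- Include = organisation setting name; Value metadata = value to set -->
    <Settings Include="pricingdecimalprecision"><Value>2</Value></Settings>
    <Settings Include="localeid"><Value>2057</Value></Settings>
    <Settings Include="isauditenabled"><Value>false</Value></Settings>
  </ItemGroup>
  <Target Name="UpdateSettings">
    <!-- Batched once per item: logs each setting before it is applied -->
    <Message Importance="high" Text="Setting %(Settings.Identity) = %(Settings.Value)" />
    <!-- Not batched: the whole item list is passed to the task in one call -->
    <MSBuild.ExtensionPack.Crm.Organization TaskAction="UpdateSetting" OrganizationUrl="$(OrganizationUrl)" Settings="@(Settings)" />
  </Target>
</Project>
```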

The next step is to import a managed solution into the newly created organisation. For this, the task used is “MSBuild.ExtensionPack.Crm.Solution” with a task action of “Import”. The task requires the path where the solution file is placed and the name and extension of the solution file. Also required are parameters that specify whether to overwrite any existing customisations in the target organisation and whether to trigger CRM plug-ins and workflows as the solution is imported.
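Since Team Build can produce both managed and unmanaged packages, the script can choose which one to import. The sketch below assumes, as the description above suggests, that the task locates the package from its Path, Name and Extension attributes. The `DeployManaged` switch and the `CrmSolution_managed` file-naming convention are hypothetical, as are the property names; an `Error` check stops the deployment before the import if the expected package is missing.

```xml
<Project ToolsVersion="4.0" DefaultTargets="ImportSolution" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <!-- Assumed default install location of the Extension Pack tasks -->
  <Import Project="$(MSBuildExtensionsPath)\ExtensionPack\4.0\MSBuild.ExtensionPack.tasks" />
  <PropertyGroup>
    <OrganizationUrl Condition="'$(OrganizationUrl)' == ''">http://CRMServer/organization1</OrganizationUrl>
    <SolutionPath Condition="'$(SolutionPath)' == ''">C:\Solutions</SolutionPath>
    <!-- Hypothetical switch: import the managed package unless /p:DeployManaged=false -->
    <DeployManaged Condition="'$(DeployManaged)' == ''">true</DeployManaged>
    <!-- Hypothetical naming convention for the two packages -->
    <SolutionFileName>CrmSolution</SolutionFileName>
    <SolutionFileName Condition="'$(DeployManaged)' == 'true'">CrmSolution_managed</SolutionFileName>
  </PropertyGroup>
  <Target Name="ImportSolution">
    <Error Condition="!Exists('$(SolutionPath)\$(SolutionFileName).zip')" Text="Solution package $(SolutionPath)\$(SolutionFileName).zip not found" />
    <MSBuild.ExtensionPack.Crm.Solution TaskAction="Import" OrganizationUrl="$(OrganizationUrl)" Name="$(SolutionFileName)" Path="$(SolutionPath)" Extension="zip" OverwriteCustomizations="true" EnableSDKProcessingSteps="true" />
  </Target>
</Project>
```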

The final two steps simply import a data map and a data file into the newly created organisation. The parameters are self-explanatory. The MSBuild Extension Pack contains some other useful CRM tasks; for more details, read the project documentation at http://msbuildextensionpack.com/.
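When there is more than one data file, MSBuild task batching can run the data import once per file instead of repeating the task. In the sketch below, the second data file, its data map name and the `DataMapName`/`EntityName` item metadata are hypothetical; the task and its attributes are those from the sample. Because each item's `FullPath` is unique, the `%(...)` references make the task execute once per `CrmDataFile` item. The corresponding data maps still need to be imported first, as in the sample.

```xml
<Project ToolsVersion="4.0" DefaultTargets="ImportInitialData" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <!-- Assumed default install location of the Extension Pack tasks -->
  <Import Project="$(MSBuildExtensionsPath)\ExtensionPack\4.0\MSBuild.ExtensionPack.tasks" />
  <PropertyGroup>
    <OrganizationUrl Condition="'$(OrganizationUrl)' == ''">http://CRMServer/organization1</OrganizationUrl>
  </PropertyGroup>
  <ItemGroup>
    <!-- Hypothetical metadata carrying the per-file parameters of the Data task -->
    <CrmDataFile Include="C:\DataFile1.csv">
      <DataMapName>Entity1DataMap</DataMapName>
      <EntityName>entity1</EntityName>
    </CrmDataFile>
    <CrmDataFile Include="C:\DataFile2.csv">
      <DataMapName>Entity2DataMap</DataMapName>
      <EntityName>entity2</EntityName>
    </CrmDataFile>
  </ItemGroup>
  <Target Name="ImportInitialData">
    <!-- Task batching: one Data import per CrmDataFile item -->
    <MSBuild.ExtensionPack.Crm.Data TaskAction="Import" OrganizationUrl="$(OrganizationUrl)" DataMapName="%(CrmDataFile.DataMapName)" SourceEntityName="%(CrmDataFile.EntityName)" TargetEntityName="%(CrmDataFile.EntityName)" FilePath="%(CrmDataFile.FullPath)" />
  </Target>
</Project>
```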

This concludes our discussion of an ALM process for solutions involving Microsoft Dynamics CRM. I hope you find this series useful, and please do give your feedback.