This is the second post in a multi-part series suggesting an ALM process for projects where Microsoft Dynamics CRM is used as a data store.

In my previous blog post, I wrote about establishing an ALM process for projects involving Microsoft Dynamics CRM. The greatest challenge in such projects is ensuring that the system is restored to a known baseline state and that the deployment process deploys code, customisations and data in a repeatable and reliable way. In that post, I described three constituent pieces of the ALM process:

- Development Build

- Team Build

- Deployment

In this post, I will elaborate on the “development build” part of the process.

Development Build

The purpose of the development build is threefold:

1) To ensure that the complete solution compiles end-to-end. A typical software solution consists of more than one Visual Studio solution, and these solutions have inter-dependencies, i.e. libraries from one solution are used by other solutions. Before checking in changes, a developer needs to ensure that there are no build breaks in any of the dependent solutions. The development build builds all the Visual Studio solutions in order, placing the output of each in the location from which the dependent solutions reference it.

2) To set up a complete, isolated environment locally for developers. A software solution usually has quite a few artefacts such as Active Directory users/groups, databases, web services and Windows services, while a developer typically works on only one part of it at a time. The development build sets up a scaled-down system that lets developers test their own work area without having to rely on an integration environment.

3) To run integration tests. Executing integration tests in one form or another is vital to ensure that developers are not destabilizing the system as they check in; this is especially important for bigger teams. Some would argue that this belongs in Continuous Integration builds and the Continuous Deployment process. In my experience, leaving it ONLY to the continuous deployment process makes errors harder to find and results in a large number of BVT (Build Verification Test) failures.
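Points 1 and 3 can be sketched in MSBuild roughly as follows. This is a minimal sketch under stated assumptions, not the exact build used here: the three solution names, the shared `ReferencesDir` output folder and the `*.IntegrationTests.dll` naming convention are hypothetical, and the test runner path assumes Visual Studio 2013 is installed (it relies on the `VS120COMNTOOLS` environment variable). With `BuildInParallel="false"` the MSBuild task builds the solutions one after another in the order they are listed, so the item order encodes the dependency order.

```xml
<Project ToolsVersion="12.0" DefaultTargets="BuildAndTest"
         xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <PropertyGroup>
    <Configuration Condition="'$(Configuration)' == ''">Debug</Configuration>
    <!-- Hypothetical folder that dependent solutions reference shared binaries from -->
    <ReferencesDir>$(MSBuildProjectDirectory)\References\</ReferencesDir>
    <!-- Assumed location of the Visual Studio 2013 test runner -->
    <VSTestConsole Condition="'$(VSTestConsole)' == ''">$(VS120COMNTOOLS)..\IDE\CommonExtensions\Microsoft\TestWindow\vstest.console.exe</VSTestConsole>
  </PropertyGroup>

  <ItemGroup>
    <!-- Hypothetical solutions, listed in dependency order -->
    <Solution Include="Common\Common.sln" />
    <Solution Include="Services\Services.sln" />
    <Solution Include="Web\Web.sln" />
  </ItemGroup>

  <Target Name="BuildAndTest" DependsOnTargets="BuildSolutions;RunIntegrationTests" />

  <!-- 1) Build each solution in turn, stopping at the first failure -->
  <Target Name="BuildSolutions">
    <MSBuild Projects="@(Solution)"
             BuildInParallel="false"
             StopOnFirstFailure="true"
             Properties="Configuration=$(Configuration);OutDir=$(ReferencesDir)" />
  </Target>

  <!-- 3) Run the integration tests; Exec fails the build on a non-zero exit code -->
  <Target Name="RunIntegrationTests">
    <ItemGroup>
      <!-- Declared inside the target so the wildcard is expanded after the build has run -->
      <IntegrationTestAssembly Include="$(ReferencesDir)*.IntegrationTests.dll" />
    </ItemGroup>
    <Exec Command="&quot;$(VSTestConsole)&quot; @(IntegrationTestAssembly->'&quot;%(FullPath)&quot;', ' ') /Logger:trx" />
  </Target>
</Project>
```

Sending every solution's output to one folder is the simplest way for dependent solutions to pick up fresh binaries; it assumes their references point at that folder (for example through a `HintPath`).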

CRM Development Build

If Microsoft Dynamics CRM is part of your end-to-end solution, you can include it in the development build process in one of the following two ways:

1) Have a local installation of Microsoft Dynamics CRM on your machine. Each run of the development build compiles the Dynamics CRM code base and deploys a new CRM organisation using the deployment scripts. The advantage of this approach is that you always work from checked-in code and can be certain that what you have on your development machine is what will be deployed to your test environments.

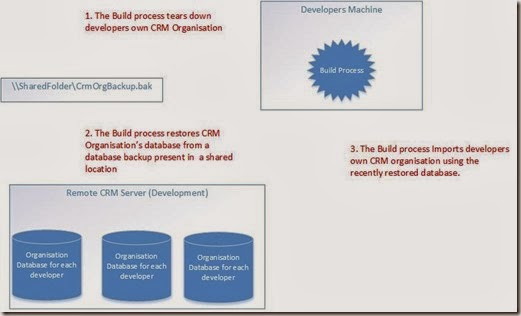

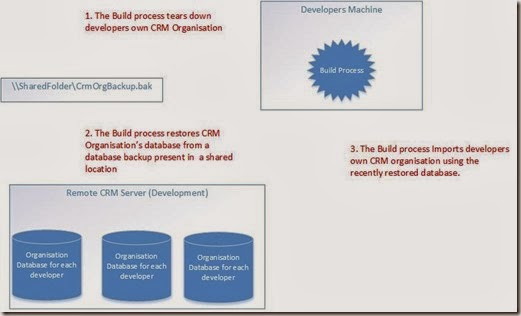

2) Have your own CRM organisation on a shared "development" CRM server. With this approach, your CRM team maintains a database backup of a stable organisation that they have deployed using the CRM deployment scripts. Your development build restores this database and imports it as your organisation.

Given the effort and resources needed to set up Dynamics CRM, and the fact that the CRM SDK is required to build the CRM code base, I prefer option 2. The downside is that you will be relying on your CRM team to provide a stable organisation. The advantages are that you do not need the CRM development tools and that development is quicker.

The following diagram illustrates how the CRM organisation is imported during the build process.

Like any other ALM process, the development build should be repeatable. This means that it should contain the following sequence of actions:

- Tear Down

- Build

- Deploy

- Start

- Test

In MSBuild, this looks roughly like the following (tear-down and deployment of the other artefacts are deliberately left out to keep it simple):

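```xml
<!-- The entry target must not be called "Build" itself, or it would depend on itself -->
<Target Name="DevBuild" DependsOnTargets="TearDownCrm;Build;DeployCrm"/>
```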
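The simplified target above covers only the CRM pieces. A fuller sketch that names all five stages is shown here; only `TearDownCrm` and `DeployCrm` come from this post, while the other target names (`FullDevBuild`, `TearDownAll`, `BuildAll`, `DeployAll`, `StartAll`, `TestAll`) and the `MyIntegrationService` Windows service are hypothetical placeholders. `DependsOnTargets` runs the listed targets in order before the entry target, so the stage order lives in one place.

```xml
<Project ToolsVersion="12.0" DefaultTargets="FullDevBuild"
         xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <!-- Entry point: the five stages run in the order listed -->
  <Target Name="FullDevBuild" DependsOnTargets="TearDownAll;BuildAll;DeployAll;StartAll;TestAll" />

  <!-- Tear down: remove what the previous run deployed (CRM organisation, databases, services) -->
  <Target Name="TearDownAll">
    <!-- Hypothetical service; the error is ignored if it is not installed or not running -->
    <Exec Command="net stop MyIntegrationService" ContinueOnError="true" />
  </Target>

  <!-- Build: compile all Visual Studio solutions in dependency order (placeholder) -->
  <Target Name="BuildAll" />

  <!-- Deploy: databases, services, CRM organisation and so on (placeholder) -->
  <Target Name="DeployAll" />

  <!-- Start: bring deployed services up so the tests can reach them -->
  <Target Name="StartAll">
    <Exec Command="net start MyIntegrationService" />
  </Target>

  <!-- Test: run the integration tests against the local environment (placeholder) -->
  <Target Name="TestAll" />
</Project>
```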

The tear-down runs PowerShell cmdlets on the remote CRM server, so remote PowerShell should be enabled on that server. Once it is enabled, you can use the following inline MSBuild task, which wraps PsExec (passed in as `ExePath`), to execute PowerShell cmdlets on the CRM server remotely:

```xml
<UsingTask TaskName="PSExecTask" TaskFactory="CodeTaskFactory" AssemblyFile="$(MSBuildToolsPath)\Microsoft.Build.Tasks.v12.0.dll">
  <ParameterGroup>
    <Server ParameterType="System.String" Required="true" />
    <Command ParameterType="System.String" Required="true" />
    <Args ParameterType="System.String" Required="false" />
    <FailOnError ParameterType="System.Boolean" Required="false" />
    <!-- Full path to PsExec.exe -->
    <ExePath ParameterType="System.String" Required="true" />
  </ParameterGroup>
  <Task>
    <Using Namespace="System" />
    <Using Namespace="System.IO" />
    <Using Namespace="System.Diagnostics" />
    <Using Namespace="System.Text" />
    <Code Type="Fragment" Language="cs"><![CDATA[
      // PsExec command line: \\server [PsExec options] command
      ProcessStartInfo start = new ProcessStartInfo();
      start.FileName = ExePath;
      start.Arguments = @"\\" + Server + " " + Args + " " + Command;
      start.UseShellExecute = false;      // required for output redirection
      start.RedirectStandardOutput = true;
      start.RedirectStandardError = true;
      Log.LogMessage("{0} {1}", start.FileName, start.Arguments);

      try
      {
        using (Process process = Process.Start(start))
        {
          // Drain stderr asynchronously so a full pipe cannot block the child process
          StringBuilder errors = new StringBuilder();
          process.ErrorDataReceived += (sender, e) =>
          {
            if (e.Data != null) { lock (errors) { errors.AppendLine(e.Data); } }
          };
          process.BeginErrorReadLine();

          string output = process.StandardOutput.ReadToEnd();
          process.WaitForExit();          // ExitCode is only available once the process has exited
          Log.LogMessage("{0}", output);
          if (errors.Length > 0) { Log.LogMessage("{0}", errors.ToString()); }

          if (process.ExitCode != 0 && FailOnError)
          {
            Log.LogError("Exit code = {0}", process.ExitCode);
          }
          else
          {
            Log.LogMessage("Exit code = {0}", process.ExitCode);
          }
        }
      }
      catch (Exception ex)
      {
        Log.LogError("PSExec task failed: {0}", ex);
      }
    ]]></Code>
  </Task>
</UsingTask>
```
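Before wiring the task into the tear-down, a quick check along these lines could confirm that PsExec can reach the CRM server and that the CRM PowerShell snap-in is registered there. This is a sketch: the PsExec path and the `CheckCrmServer` target name are hypothetical, and it assumes the `UsingTask` above is declared in the same project. `-accepteula` (accept the Sysinternals licence prompt non-interactively) and `-h` (run with the account's elevated token, where available) are standard PsExec switches, placed before the command because the task builds `\\server Args Command`.

```xml
<PropertyGroup>
  <!-- Hypothetical location of Sysinternals PsExec.exe -->
  <PsExec Condition="'$(PsExec)' == ''">C:\Tools\Sysinternals\PsExec.exe</PsExec>
</PropertyGroup>

<Target Name="CheckCrmServer">
  <!-- Runs: PsExec \\server -accepteula -h powershell Get-PSSnapin -Registered -->
  <PSExecTask Server="$(CRMWEBComputerName)"
              Args="-accepteula -h"
              Command="powershell Get-PSSnapin -Registered"
              ExePath="$(PsExec)"
              FailOnError="true" />
</Target>
```

If the output lists `Microsoft.Crm.PowerShell`, the tear-down and import commands have what they need on the server side.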

The tear-down target is shown below:

```xml
<Target Name="TearDownCrm" Condition="'$(SkipCrmDeployment)' != 'true'">
  <!-- Disable and then delete the organisation on the CRM server -->
  <PSExecTask Server="$(CRMWEBComputerName)"
              Command="powershell Add-PSSnapin Microsoft.Crm.PowerShell; Disable-CrmOrganization -Name $(CrmNewOrganisationName); Remove-CrmOrganization -Name $(CrmNewOrganisationName)"
              ExePath="$(PsExec)" />
  <!-- Removing the organisation does not drop its database, so drop it explicitly -->
  <MSBuild.ExtensionPack.SqlServer.SqlExecute TaskAction="Execute"
      CommandTimeout="120"
      Retry="true"
      Sql="ALTER DATABASE $(CrmNewDatabaseName) SET SINGLE_USER WITH ROLLBACK IMMEDIATE; DROP DATABASE $(CrmNewDatabaseName);"
      ConnectionString="$(CrmDatabaseServerConnectionString)"
      ContinueOnError="true" />
</Target>
```

The variables used in the script are pretty much self-explanatory. Note the `ContinueOnError` attribute in the tear-down: it is there because on the first run of the build there is no database or organisation to remove.
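If you would rather not suppress errors on the database step, a guarded statement is one alternative. The sketch below reuses the property names from the tear-down target and drops the database only when `DB_ID` finds it, so the first run succeeds without `ContinueOnError` and a genuine failure (for example, a permissions problem) still breaks the build. The organisation removal needs no change for the first run, since with `FailOnError` left at its default of false the `PSExecTask` only logs a non-zero exit code.

```xml
<!-- Drop the organisation database only if it exists, so the first run raises no error -->
<MSBuild.ExtensionPack.SqlServer.SqlExecute TaskAction="Execute"
    CommandTimeout="120"
    Sql="IF DB_ID(N'$(CrmNewDatabaseName)') IS NOT NULL BEGIN ALTER DATABASE [$(CrmNewDatabaseName)] SET SINGLE_USER WITH ROLLBACK IMMEDIATE; DROP DATABASE [$(CrmNewDatabaseName)]; END"
    ConnectionString="$(CrmDatabaseServerConnectionString)" />
```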

The deployment targets are shown below:

```xml
<Target Name="DeployCrm" DependsOnTargets="RestoreCrmOrganisationDatabase;ImportCrmOrganisation" Condition="'$(SkipCrmDeployment)' != 'true'" />

<Target Name="RestoreCrmOrganisationDatabase">
  <MSBuild.ExtensionPack.SqlServer.SqlExecute TaskAction="Execute"
      CommandTimeout="120"
      Retry="true"
      Sql="IF EXISTS(SELECT * FROM sysdatabases WHERE NAME LIKE '$(CrmNewDatabaseName)') ALTER DATABASE $(CrmNewDatabaseName) SET SINGLE_USER WITH ROLLBACK IMMEDIATE; RESTORE DATABASE $(CrmNewDatabaseName) FROM DISK = N'$(CrmDatabaseBackupFile)' WITH REPLACE, FILE = 1, MOVE N'MSCRM' TO N'$(CrmDatabaseDataFileLocation)\$(CrmNewDatabaseName).mdf', MOVE N'MSCRM_log' TO N'$(CrmDatabaseDataFileLocation)\$(CrmNewDatabaseName)_log.ldf';"
      ConnectionString="$(CrmDatabaseServerConnectionString)"/>
</Target>

<Target Name="ImportCrmOrganisation">
  <!-- Copy the user-mapping file to the CRM file store and replace its tokens with build properties -->
  <Copy SourceFiles="$(MSBuildProjectDirectory)\Resources\$(CrmUserMappingFile)" DestinationFiles="$(CrmFileStore)\$(CrmNewOrganisationName).xml"/>
  <MSBuild.ExtensionPack.FileSystem.Detokenise TaskAction="Detokenise" TargetFiles="$(CrmFileStore)\$(CrmNewOrganisationName).xml" DisplayFiles="true"/>
  <!-- Run the import script on the CRM server -->
  <PSExecTask Server="$(CRMWEBComputerName)"
      Command="powershell $(CrmImportOrganisationScriptPath) -sqlServerInstance '$(CrmSqlServerInstance)' -databaseName '$(CrmNewDatabaseName)' -reportServerUrl '$(CrmReportServerUrl)' -orgDisplayName $(CrmNewOrganisationName) -orgName $(CrmNewOrganisationName) -userMappingXmlFile '$(CrmFileStore)\$(CrmNewOrganisationName).xml'"
      ExePath="$(PSExec)"/>
</Target>
```

The deployment first restores the database using the "SqlExecute" task from the MSBuild Extension Pack. Once the database is restored, the user-mapping file is copied to the CRM file store and detokenised, and the import script runs on the remote server, executing the "Import-CrmOrganization" cmdlet. Once imported, the organisation is created from the database backup and is available to the developer.
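The targets above depend on a handful of properties. A minimal sketch of per-developer defaults is below; every value is a hypothetical placeholder, and the `Condition` on each property lets it be overridden from the command line, for example `msbuild DevBuild.proj /p:SkipCrmDeployment=true` (`DevBuild.proj` is also a hypothetical name). Naming the organisation after the developer's user name is one way to keep each developer's organisation separate on the shared server described in option 2.

```xml
<PropertyGroup>
  <!-- All values are hypothetical placeholders; override any of them with /p:Name=Value -->
  <SkipCrmDeployment Condition="'$(SkipCrmDeployment)' == ''">false</SkipCrmDeployment>
  <CRMWEBComputerName Condition="'$(CRMWEBComputerName)' == ''">crmdev01</CRMWEBComputerName>
  <CrmSqlServerInstance Condition="'$(CrmSqlServerInstance)' == ''">crmsql01</CrmSqlServerInstance>
  <CrmReportServerUrl Condition="'$(CrmReportServerUrl)' == ''">http://crmsql01/ReportServer</CrmReportServerUrl>
  <!-- One organisation per developer; assumes USERNAME contains only letters and digits -->
  <CrmNewOrganisationName Condition="'$(CrmNewOrganisationName)' == ''">Dev$(USERNAME)</CrmNewOrganisationName>
  <!-- Follows the usual <OrgName>_MSCRM naming convention for organisation databases -->
  <CrmNewDatabaseName Condition="'$(CrmNewDatabaseName)' == ''">$(CrmNewOrganisationName)_MSCRM</CrmNewDatabaseName>
  <!-- Connect to master: a database cannot be dropped or restored while connected to it -->
  <CrmDatabaseServerConnectionString Condition="'$(CrmDatabaseServerConnectionString)' == ''">Data Source=$(CrmSqlServerInstance);Initial Catalog=master;Integrated Security=True</CrmDatabaseServerConnectionString>
  <!-- These two paths are resolved by SQL Server on its own machine, not on the build machine -->
  <CrmDatabaseBackupFile Condition="'$(CrmDatabaseBackupFile)' == ''">\\crmsql01\Backups\StableOrg_MSCRM.bak</CrmDatabaseBackupFile>
  <CrmDatabaseDataFileLocation Condition="'$(CrmDatabaseDataFileLocation)' == ''">D:\SQLData</CrmDatabaseDataFileLocation>
  <!-- Written by Copy on the build machine and read by the import script on the CRM server, hence a share -->
  <CrmFileStore Condition="'$(CrmFileStore)' == ''">\\crmdev01\CrmFileStore</CrmFileStore>
</PropertyGroup>
```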

In the next post, I will discuss setting up Team Build for solutions containing Microsoft Dynamics CRM.

![Sample CRM Folder Structure](http://lh6.ggpht.com/-9yFFneWnqc0/UyZVnb1qRkI/AAAAAAAAExg/2B-fWZEkLzA/s1600/Sample%252520CRM%252520Folder%252520Structure%25255B2%25255D.jpg)

![clip_image001](http://lh4.ggpht.com/-BGjEeECNwx4/UthhskPJnQI/AAAAAAAAEuY/YDh6aJ8J-IY/clip_image001%25255B7%25255D_ca051aed-1e8f-4503-bfbd-2634176e8c95_thumb%25255B4%25255D.png?imgmax=800)